I honestly keep forgetting AWS Networking. Here are some alternative ways of understanding the principles of networking in AWS…in a sillier and more interesting way. I would recommend training on A Cloud Guru first.

FAQs / mnemonics / breaking down the understanding

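Do devices in different availability zones in the same VPC communicate with each other? – Yes. A subnet lives in exactly one Availability Zone, so they sit in different subnets, but every route table in the VPC has a built-in “local” route covering the whole VPC address range. That means the subnets can reach each other, as long as the security groups and NACL’s allow the traffic.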
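To make the “local route” idea concrete, here is a minimal sketch using only Python’s `ipaddress` module. The CIDR ranges and AZ names are made up for illustration, and this models the idea rather than calling any AWS API.

```python
import ipaddress

# Hypothetical address plan: one VPC, one subnet per Availability Zone.
vpc_cidr = ipaddress.ip_network("10.0.0.0/16")
subnets = {
    "us-east-1a": ipaddress.ip_network("10.0.1.0/24"),
    "us-east-1b": ipaddress.ip_network("10.0.2.0/24"),
}


def covered_by_local_route(subnet, vpc=vpc_cidr):
    """Return True if the VPC-wide 'local' route covers this subnet."""
    return subnet.subnet_of(vpc)


for az, subnet in subnets.items():
    # Both subnets fall inside the VPC range, so the local route reaches both.
    print(az, subnet, covered_by_local_route(subnet))
```

Two different subnets, two different AZs, one VPC range covering both: that is why they can talk without any extra routing.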

What makes a VPC subnet available to the public internet? – It requires a combination of an Internet Gateway (a connection to the internet) attached to the VPC and an entry in the subnet’s route table with a destination of 0.0.0.0/0 and a target of the ID of the Internet Gateway. The instances also need a public IP address to be reachable. Alternate Thinking – Think of the Internet Gateway (IGW) as your cable modem and the route table as plugging your ethernet cable into the right port on your switch.

What makes a VPC subnet not available to the public internet for hackers to attack it? – As stated previously, it is when the subnet’s route table has no route to an Internet Gateway. Alternate Thinking – If you don’t have a cable modem, or no cable is connected to it, how can you be connected to the internet? – DUH…it’s so obvious.

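How can I provide the internetz to my EC2 instances for patching and such without exposing them to the general internet? – Instead of pointing the 0.0.0.0/0 route at the Internet Gateway, the private subnet’s route table points it at a NAT Gateway. The NAT Gateway itself sits in a public subnet, so your instances can start connections out to the internet, but nobody on the internet can start a connection in.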
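The three cases above (public, no internet at all, outbound-only through NAT) come down to where the default route points. Here is a rough sketch, assuming a route table simplified to a plain dict of destination to target; the IDs are invented and `classify_subnet` is a hypothetical helper, not an AWS API.

```python
def classify_subnet(routes):
    """Classify a subnet by the target of its default route.

    `routes` maps destination CIDR -> target ID, a simplified stand-in
    for a real route table.
    """
    default_target = routes.get("0.0.0.0/0", "")
    if default_target.startswith("igw-"):
        return "public"
    if default_target.startswith("nat-"):
        return "private, outbound-only via NAT"
    return "isolated"


# Made-up route tables for illustration.
public_rt = {"10.0.0.0/16": "local", "0.0.0.0/0": "igw-0123456789abcdef0"}
nat_rt = {"10.0.0.0/16": "local", "0.0.0.0/0": "nat-0123456789abcdef0"}
isolated_rt = {"10.0.0.0/16": "local"}

for name, rt in [("public", public_rt), ("nat", nat_rt), ("isolated", isolated_rt)]:
    print(name, "->", classify_subnet(rt))
```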

What the hell is a security group as it relates to VPC’s? – A security group is essentially the basic Windows Firewall…Defined! Like the Windows Firewall when it is turned on, it denies everyone that tries to come in to your house, but is more than happy to kick out the very same people (inbound is denied by default, outbound is allowed)…kinda like my inlaws during Thanksgiving…You can vet the inlaws for invitation to your network/home by giving them ports to open, like port 443 for HTTPS and port 22 for SSH, and a source they are allowed to come from, like your public IP or the addresses of the rest of the neighborhood.

How are Security Groups different from NACL’s? – Security groups are like a firewall you can use at the machine / instance / Virtual Machine level, or they can even act as a firewall…for a group of systems. They are stateful: if a request is allowed in, the reply is automatically allowed back out. As for NACL’s, think of them like your curmudgeon grandfather, they don’t like anyone…but if you happen to come by they won’t let go of you either as they have to tell you all about their stories from NAM. Since they work at the network (subnet) level and are stateless, you have to define what traffic is allowed to come in and what is allowed to go out, separately. Grandfather would like to tell you his stories of Nam: One Time in Nam…
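The stateful versus stateless difference is easier to see in a toy model. The sketch below only looks at ports and ignores addresses, protocols and rule ordering; both function names are hypothetical and exist purely to illustrate the idea.

```python
def sg_allows_round_trip(inbound_ports, request_port):
    """Security group (stateful): if the request is allowed in,
    the reply is allowed back out automatically."""
    return request_port in inbound_ports


def nacl_allows_round_trip(inbound_ports, outbound_port_ranges,
                           request_port, reply_port):
    """NACL (stateless): the request must match an inbound rule AND
    the reply must separately match an outbound rule."""
    inbound_ok = request_port in inbound_ports
    outbound_ok = any(lo <= reply_port <= hi for lo, hi in outbound_port_ranges)
    return inbound_ok and outbound_ok


# HTTPS request to port 443; the reply goes back to an ephemeral client port.
print(sg_allows_round_trip({443}, 443))
print(nacl_allows_round_trip({443}, [], 443, 50000))
print(nacl_allows_round_trip({443}, [(1024, 65535)], 443, 50000))
```

With the NACL, opening port 443 inbound is not enough; the reply is dropped until an outbound rule covers the client’s ephemeral port.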

What is the purpose of a route table? – It’s mainly directions telling a subnet where to send traffic bound for another subnet / networking component. Think of it like Google Maps for the network. Give it the wrong directions…and you will be late to your interview. Give it the right directions…you might still get lost, but at least you are going the right direction.
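When more than one route matches a destination, the most specific one (the longest prefix) wins. Here is a small sketch of that lookup using Python’s `ipaddress` module; the route table is a simplified dict with made-up IDs, and `pick_route` is a hypothetical helper.

```python
import ipaddress


def pick_route(routes, destination_ip):
    """Return the target of the most specific matching route, or None."""
    ip = ipaddress.ip_address(destination_ip)
    matches = []
    for cidr, target in routes.items():
        network = ipaddress.ip_network(cidr)
        if ip in network:
            matches.append((network.prefixlen, target))
    if not matches:
        return None  # no directions at all: the packet goes nowhere
    # Longest prefix = most specific route.
    return max(matches)[1]


routes = {"10.0.0.0/16": "local", "0.0.0.0/0": "igw-0123456789abcdef0"}
print(pick_route(routes, "10.0.2.15"))  # inside the VPC range
print(pick_route(routes, "8.8.8.8"))    # everything else
```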

( Sarcastically ) How do I definitely ensure a hacker can access my network from the internet? – Spin up an instance in AWS, use the default password, define the correct security group rules, define the correct NACL rules, correctly attach the internet gateway and make the right route table adjustments, test to make sure it is available on the public internet, and make sure to post the address and password on Reddit!

What are some ways I can connect to my EC2 Instance? – If it is available in a public network with the right NACL and security group rules: A. Use the private SSH key from the key pair you chose when the EC2 instance was created, and limit the security group source to only your public IP. B. Use a VPN such as OpenVPN that connects to the VPC network and SSH into the instance over it. C. Use a Bastion host which exists in a public subnet and use it as a jump box to SSH into the private instance.

If my EC2 instance is in a private subnet with no internet access…how do I get it internet access!! – Assuming you need it for things like Windows Updates or would like to upgrade your Linux instance, you can either create a NAT Gateway in a public subnet and add it as the target of the 0.0.0.0/0 route in the private subnet’s route table…Or, if you connect a Site-to-Site VPN to that network, it will not get internet access directly…rather all the traffic will route through the Site-to-Site VPN connection and out through whatever internet connection is on the other end.

What is a good scenario for VPC Peering? – If the other network is not in your AWS Account / AWS Organization, then you use VPC Peering. Company just merged with another company? VPC Peering! The two VPC’s do not have to be in the same region, since inter-region VPC Peering is allowed, but their address ranges must not overlap.
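One thing worth checking before peering two VPC’s after a merger is that their CIDR ranges do not overlap, since peering needs distinct address ranges on each side. A minimal pre-flight sketch, with invented ranges and a hypothetical `can_peer` helper:

```python
import ipaddress


def can_peer(cidr_a, cidr_b):
    """Return True if the two VPC ranges do not overlap."""
    a = ipaddress.ip_network(cidr_a)
    b = ipaddress.ip_network(cidr_b)
    return not a.overlaps(b)


# Made-up ranges: our VPC versus two candidate VPC's from the other company.
print(can_peer("10.0.0.0/16", "10.1.0.0/16"))    # distinct ranges
print(can_peer("10.0.0.0/16", "10.0.128.0/17"))  # second sits inside the first
```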

How is a static Elastic IP elastic? Can it stretch? – AWS says that we should not treat our machines as pets, rather we should treat them as cattle. An Elastic IP is a static public IPv4 address that belongs to your account rather than to any one instance, so you can move it from one instance to another. Ex. For comparison purposes, let’s just assume that DNS names are the same thing as IP’s (they are not…but keep reading). Betsyserver01 has an IP of X. Since AWS is ruthless and does not care if a certain IP is associated with a certain instance / name, it will send Betsyserver01 to the glue factory, as Betsyserver01 is gonna become Thanksgiving dinner and be done with it. Once Betsyserver02 comes by, it will be given the same identity/IP as Betsyserver01. HOW BRUTAL!

From the guy in the mainframe department: How does an EC2 Instance (a machine) access an S3 Bucket (NAS / SAN)? – Sadly…this has little to do with networking, rather IAM permissions….ouch! Apparently, word on the street, the machine has to play its “Role” when meeting up with the other guy, called the S3 Bucket guy. Only while he is “role playing” can he talk to the bucket. Sounds like someone drank too much water…
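The “role playing” boils down to two JSON policy documents: a trust policy that lets the EC2 service assume the role, and a permissions policy that says what the role can do with the bucket. A sketch of both as Python dicts; the bucket name is hypothetical, and the exact actions you grant are an assumption that should be trimmed to what the machine really needs.

```python
import json

BUCKET = "example-bucket"  # hypothetical bucket name

# Who may play the role: the EC2 service, on behalf of the instance.
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"Service": "ec2.amazonaws.com"},
        "Action": "sts:AssumeRole",
    }],
}

# What the role may do while in character: read from one bucket.
permissions_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Action": ["s3:GetObject", "s3:ListBucket"],
        "Resource": [
            f"arn:aws:s3:::{BUCKET}",
            f"arn:aws:s3:::{BUCKET}/*",
        ],
    }],
}

print(json.dumps(trust_policy, indent=2))
print(json.dumps(permissions_policy, indent=2))
```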

What are Load Balancers? – They spread incoming traffic across multiple instances. If you are from traditional IT and don’t have to deal with developers or even the term “DevOps”, you probably don’t really need to know this concept.

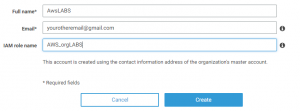

What is a good scenario for Subnet Sharing? – When you would like to share the same network with other accounts, especially when using AWS Organizations, since it allows you to create guardrails for certain accounts. A good example of this is allowing Account X to deploy only to a certain region and subnet.

What is a good scenario for the VPC Transit Gateway? – A Transit Gateway is a central hub that connects many VPC’s and on-premises networks, which makes it a good fit for shared resources. For example, one entity can own the Active Directory/VPN infrastructure and share it with the other operating companies within the conglomerate.

What is the point of a VPC Endpoint? – Let’s use AWS S3 (the general object storage service) as the example. As VPC’s are all about security and limiting access to who, what and where, a VPC endpoint gives the resources inside a VPC a private path to a service like S3 without going over the public internet, and it lets you restrict access to certain networks. Alternate Thinking – Without a VPC endpoint, the bucket can be reached willy nilly from anywhere by anyone holding the right AWS Account / Key. Add a VPC endpoint for S3 to a particular VPC and limit the bucket policy to that endpoint….suddenly the bucket has the restrictions of that particular VPC…and is a bit more secure.
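One common way to get that “only from this VPC” restriction is a bucket policy that denies any request not arriving through a specific endpoint, using the `aws:SourceVpce` condition key. A sketch that builds such a policy as a Python dict; the bucket name and endpoint ID are made up, and `endpoint_only_bucket_policy` is a hypothetical helper. Be careful with a policy like this, since it can also lock out your own console access.

```python
import json


def endpoint_only_bucket_policy(bucket, vpce_id):
    """Build a bucket policy that denies access unless the request
    comes through the given VPC endpoint."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "DenyUnlessFromVpcEndpoint",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:*",
            "Resource": [
                f"arn:aws:s3:::{bucket}",
                f"arn:aws:s3:::{bucket}/*",
            ],
            "Condition": {"StringNotEquals": {"aws:SourceVpce": vpce_id}},
        }],
    }


# Hypothetical bucket name and endpoint ID.
policy = endpoint_only_bucket_policy("example-bucket", "vpce-0123456789abcdef0")
print(json.dumps(policy, indent=2))
```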

Formal Documentation: https://docs.aws.amazon.com/vpc/latest/userguide/what-is-amazon-vpc.html

Some Slides for your entertainment: https://www.slideshare.net/AmazonWebServices/aws-networking-fundamentals-145270167

Has a simple diagram covering network security: https://aws.amazon.com/blogs/apn/aws-networking-for-developers/